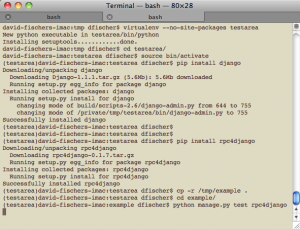

When I packaged up the first version of rpc4django and sent it to the package index (pypi) with distutils, I thought I was doing everything right. I’d packaged my Django application (if you’re unclear on applications vs. projects, see the Django terminology) so that it could be used with a variety of projects and required almost no configuration to get off the ground. I filled out all the metadata in the distutils setup() function and made sure that my package could be installed via easy_install. I even borrowed a fix from Django’s setup.py to make sure the “install data” (including Django templates) gets installed in the right place on the flawed Mac OS X versions of python. Little did I know, I was just getting started.

Easy Install

Easy install is a pretty convenient way to install packages, and making your packages easier to install means more users will actually install them. Provided you have used distutils or setuptools for your python package and have a working setup.py, you should be able to upload your package to the python package index and install it with easy install. Where easy install doesn’t shine, however, is in how it automatically creates .egg files out of packages.

Making eggs out of libraries seems like a great idea on paper, but when a Django application is packaged as a zipped egg, the default settings.py TEMPLATE_LOADERS will not be able to load its templates. The additional loader django.template.loaders.eggs.load_template_source must be enabled. Easy install will only create a zipped egg if setuptools “determines” that it can safely do so. Alternatively, the zip_safe setting can be set to False in the setuptools.setup() function, which forces easy install to unpack the package onto the filesystem. Until setuptools learns to identify Django apps, having easy install unpack your templates means less configuration for your users, and that should get you more of them.

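```python
# Prefer setuptools, but fall back to distutils if it is not installed.
try:
    from setuptools import setup
except ImportError:
    from distutils.core import setup

setup(
    # Tell setuptools to install the package unzipped so templates stay on disk.
    zip_safe=False,
    #...
)
```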
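For users who already have the application installed as a zipped egg, the settings change described above would look roughly like the fragment below. This is a sketch that assumes the function-style loader paths used by the Django releases of this era; the first two entries are what I understand the defaults to be, and only the last line is the addition.

```python
# settings.py (fragment)
TEMPLATE_LOADERS = (
    "django.template.loaders.filesystem.load_template_source",
    "django.template.loaders.app_directories.load_template_source",
    # Extra loader, needed only when an app's templates live inside a zipped egg.
    "django.template.loaders.eggs.load_template_source",
)
```

Setting zip_safe to False in the package spares every user from having to make this change.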

This issue, which I noticed after the first rpc4django release, will be fixed in the next release in a few days.

Package Index (Pypi)

I got my module into pypi and then realized that there are a lot of issues that go with it. I made sure that the setuptools/distutils summary and description were set, but until I read the somewhat erroneously named cheeseshop tutorial, I didn’t understand that pypi sometimes subtly and sometimes overtly steers people towards “better” packages.

Start with the distutils tutorial on distributing packages. This will show you what needs to go into the setup.py and how to register, which really is the first step. However, people browsing pypi can come across a well documented library with installation instructions and installers directly on the pypi page, like setuptools, or they can come across a listing that is little more than a link to the package’s webpage. To get that pretty documentation, you have to spend a few minutes learning reST, and the result has to go into long_description in setup.py. To steal a good tip from setuptools, put the structured text in the README and read it into your setup.py.
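The README tip can be sketched as a small helper in setup.py. This is only an illustration: the function name read_long_description and the package name in the usage comment are placeholders of mine, not something taken from setuptools or rpc4django.

```python
import os


def read_long_description(filename="README", base_dir=None):
    """Return the text of a reST file, by default from the current directory."""
    if base_dir is None:
        # setup.py is normally run from the project root, next to the README.
        base_dir = os.getcwd()
    path = os.path.join(base_dir, filename)
    with open(path) as readme:
        return readme.read()


# In setup.py this would be used along these lines (placeholder values):
#
#     setup(
#         name="mypackage",
#         long_description=read_long_description(),
#         #...
#     )
```

Keeping the text in one file means the pypi page and the README in the source tree cannot drift apart.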

Cheesecake

The last aspect for today has to do with searching for packages on pypi. When you search, the results are returned based on a “score”. At first, like other search engines, I thought this score might be some sort of relevance number related to the search. It isn’t. It is the cheesecake index. This score is based on the quality (Kwalitee) of the package [Edit: apparently the score is based on relevance and the score listed is not based on the cheesecake index]. If python setup.py install works with your package, it helps your score. If you have unit tests and a docs/ directory, you get some more points. If you get a good pylint score or your code complies with PEP8, you get some more.

Overall, it’s not a bad idea. Linting and pep8 and a changelog file don’t make a package great, but easy installation, good documentation and unit tests are probably pretty strongly correlated with a higher quality package.

Upcoming RPC4Django Release

By my own calculations, rpc4django-0.1.0 got about 54% of the cheesecake index and that merits the #7 spot when people search for “rpc”. Even before I knew about cheesecake, I was running pep8 and pylint and I’m pretty good about installation, documentation and unit testing. Expect improvements in the next version along with the following features (which will be in my CHANGELOG for a couple of extra cheesecake points):

- reST documentation instead of what I rolled myself

- the rpc method summary could use some reST

- fix my easy install problems with templates

- package improvements, including to my pypi page

- test compatibility with more systems and python versions — it looks good down to 2.4, but there are some unit test failures related to the way exceptions are presented with repr()

- analyzing post data to see if it is json or xml to improve the dispatching library compatibility — it’ll still use content type if it is set properly

- if I get a chance, I’ll add a javascript library that allows testing the methods directly from the method summary
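As a rough idea of what the post data analysis in the list above could look like, here is a minimal sketch. It is not rpc4django's implementation: the function name guess_rpc_format and the set of recognized content types are assumptions made for illustration. It trusts the content type when it is recognizable and otherwise looks at the first non-whitespace character of the body.

```python
def guess_rpc_format(body, content_type=None):
    """Return "json", "xml", or None for a request body given as a string."""
    if content_type:
        # Drop parameters such as "; charset=utf-8" before comparing.
        main_type = content_type.split(";")[0].strip().lower()
        if main_type == "application/json":
            return "json"
        if main_type in ("text/xml", "application/xml"):
            return "xml"

    # Fall back to sniffing the payload itself.
    stripped = body.lstrip()
    if stripped.startswith("<"):
        return "xml"
    if stripped.startswith("{") or stripped.startswith("["):
        return "json"
    return None
```

A client that posts a JSON request with a generic content type such as text/plain would still be dispatched as JSON under this scheme.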