When I first showed Pip, the Python package installer, to a coworker a few years ago, his first reaction was that he didn’t think it was a good idea to run code downloaded from the Internet as root without looking at it first. He’s got a point. Paul McMillan devoted part of his PyCon talk to this subject.

Python package management vs. Linux package management

To illustrate the security concerns, it helps to contrast how Python modules are usually installed with how Apt or Yum install packages on Linux distributions. Debian and Red Hat distributions usually ship the PGP keys for their packages with the distribution itself. Provided you installed a legitimate Linux distribution, you have the right PGP keys, and every package downloaded through Apt/Yum is PGP checked. Each package is signed with the distribution’s private key, so you can verify that the exact package you downloaded was signed and has not been modified since. The package manager performs this check and warns you when the signature does not match.

Pip and EasyInstall don’t do any of that. They download packages in plaintext (which would be fine if every package were PGP signed and checked), and they download the package checksums in plaintext too. If you manually point Pip at a PyPI repository over HTTPS (say, crate.io), it does not check the certificate. On an untrusted network, it would not be hard to intercept requests to PyPI, download the package, add malicious code to setup.py, recalculate the checksum, and serve the tampered package in place of the original.
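To make the checksum problem concrete, here is a small sketch of why a checksum fetched over the same unauthenticated channel as the package proves nothing: whoever can modify the package can also recompute the checksum. The package contents are made up, and MD5 is an assumption for illustration; any hash function behaves the same way here.

```python
import hashlib


def md5_hex(data: bytes) -> str:
    """Hex digest of the data, standing in for the checksum an index publishes."""
    return hashlib.md5(data).hexdigest()


def client_accepts(package: bytes, advertised_checksum: str) -> bool:
    """An installer that only compares the download against a checksum it
    fetched over the same plaintext connection."""
    return md5_hex(package) == advertised_checksum


# What the author actually uploaded (made-up contents).
original = b"from setuptools import setup\nsetup(name='example')\n"

# An attacker on the network path appends code to setup.py ...
tampered = original + b"# malicious payload would go here\n"
# ... and simply recomputes the checksum before passing both along.
forged_checksum = md5_hex(tampered)

print(client_accepts(tampered, forged_checksum))  # True: the check passes
```

A signature avoids this because the attacker cannot produce a valid one without the author’s private key, as long as the verifying public key reached you through a trusted path.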

I think the big users of Python, like the Mozillas of the world, run their own PyPI servers and load only a subset of packages into them. I’ve heard of other shops building RPMs or DEBs out of Python packages, which is what I often do. That lets you leverage your distribution’s infrastructure, where the signing and checking machinery already exists. If you don’t want to do that, you can always PGP sign and verify your packages, which is what the rest of this post is about.

Verifying a package

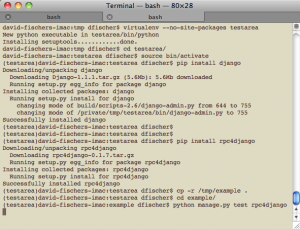

Relatively few packages on the Cheese Shop (PyPI) are PGP signed. For this example, I’ll use rpc4django, a package I release, and GNU Privacy Guard (GPG), a PGP implementation. The package’s PGP signature (rpc4django-0.1.12.tar.gz.asc) can be downloaded along with the package itself (rpc4django-0.1.12.tar.gz). If you simply attempt to verify it, you’ll probably get a message like this:

```
% gpg --verify rpc4django-0.1.12.tar.gz.asc rpc4django-0.1.12.tar.gz
gpg: Signature made Mon Mar 12 15:14:28 2012 PDT using RSA key ID A737AB60
gpg: Can't check signature: public key not found
```

This message tells you that the signature was made with PGP on the given date, but without the public key there is no way to verify that the package has not been modified since the author (me) signed it. So the next step is to get the public key for the package:

```
% gpg --search-keys A737AB60
gpg: searching for "A737AB60" from hkp server keys.gnupg.net
(1)	David Fischer <djfische@gmail.com>
	  2048 bit RSA key A737AB60, created: 2011-11-20
Keys 1-1 of 1 for "0xA737AB60".  Enter number(s), N)ext, or Q)uit > q
```

If you hit “1”, you will import the key. Re-running the verify command will now properly verify the package:

```
% gpg --verify rpc4django-0.1.12.tar.gz.asc rpc4django-0.1.12.tar.gz
gpg: Signature made Mon Mar 12 15:14:28 2012 PDT using RSA key ID A737AB60
gpg: Good signature from "David Fischer <djfische@gmail.com>"
```
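Under the hood, gpg --search-keys talks to the keyserver over HKP, a simple HTTP-based protocol on port 11371: a GET to /pks/lookup with op=index lists matching keys, and op=get returns the ASCII-armored public key. The helper name below is hypothetical, and keys.gnupg.net is only the host from the output above; it may not be reachable today. Note that plain HKP is unauthenticated, so a key fetched this way is only as trustworthy as your web of trust says it is.

```python
from urllib.parse import urlencode, urlunsplit

HKP_PORT = 11371  # default port for the HKP keyserver protocol


def hkp_lookup_url(host: str, key_id: str, op: str = "get") -> str:
    """Build an HKP lookup URL.

    op="index" lists keys matching the search; op="get" returns the armored key.
    options=mr asks the server for machine-readable output.
    """
    query = urlencode({"op": op, "search": "0x" + key_id, "options": "mr"})
    return urlunsplit(("http", f"{host}:{HKP_PORT}", "/pks/lookup", query, ""))


print(hkp_lookup_url("keys.gnupg.net", "A737AB60"))
```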

That ten different Python modules will probably be signed by ten different PGP keys is a problem, and I’m not sure there’s a way to make that easier. In addition, my key is probably not in your web of trust: nobody you trust has signed my public key. So when you verify the signature, you will probably also see a message like this:

```
gpg: WARNING: This key is not certified with a trusted signature!
gpg:          There is no indication that the signature belongs to the owner.
```

This means that I need to get my key signed by more people, and you need to expand your web of trust.
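If you want to script the verification step rather than reading gpg’s human-readable messages, gpg can print machine-readable status lines with --status-fd (a real GnuPG option; keywords such as GOODSIG, BADSIG, NO_PUBKEY and TRUST_UNDEFINED are documented in GnuPG’s doc/DETAILS file). The sketch below assumes gpg is on PATH; the function names are hypothetical, and it only looks at the handful of keywords that correspond to the messages shown above.

```python
import subprocess
from typing import Optional


def parse_gpg_status(status: str) -> dict:
    """Summarize the [GNUPG:] status lines emitted by gpg --status-fd."""
    result = {"valid": False, "key_id": None, "missing_key": False, "trust": None}
    for line in status.splitlines():
        if not line.startswith("[GNUPG:] "):
            continue
        parts = line.split()[1:]  # drop the "[GNUPG:]" prefix
        if not parts:
            continue
        keyword = parts[0]
        arg: Optional[str] = parts[1] if len(parts) > 1 else None
        if keyword == "GOODSIG":
            result["valid"] = True
            result["key_id"] = arg
        elif keyword == "BADSIG":
            result["valid"] = False
            result["key_id"] = arg
        elif keyword == "NO_PUBKEY":
            # The "public key not found" case: import the key first.
            result["missing_key"] = True
            result["key_id"] = arg
        elif keyword.startswith("TRUST_"):
            # e.g. TRUST_UNDEFINED -> "undefined" (key not in your web of trust)
            result["trust"] = keyword[len("TRUST_"):].lower()
    return result


def verify_sdist(signature_path: str, package_path: str) -> dict:
    """Run gpg --verify and summarize its status output."""
    # --status-fd 1 sends status lines to stdout; the usual messages go to stderr.
    proc = subprocess.run(
        ["gpg", "--status-fd", "1", "--verify", signature_path, package_path],
        capture_output=True,
        text=True,
    )
    summary = parse_gpg_status(proc.stdout)
    summary["exit_code"] = proc.returncode
    return summary
```

For example, verify_sdist("rpc4django-0.1.12.tar.gz.asc", "rpc4django-0.1.12.tar.gz"). Keeping valid and trust as separate fields is deliberate: a good signature from a key nobody you trust has certified comes back with valid set to True and trust set to "undefined", which is exactly the situation behind the warning above.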

Signing a package

Signing a package is easy, and it is done as part of the upload process to PyPI. This assumes you already have PGP set up. I haven’t done this in about a month, so I hope the command is right.

```
% python setup.py sdist upload --sign
```

There are additional options, such as --identity to choose which key signs the package, but the signing part is easy.

However, how many people actually verify the signature? Almost nobody. The package managers (Pip/EasyInstall) don’t, and you probably just use one of them.

The future of Python packaging

So what can we do? I tried to work on this at the PythonSD meetup, but I didn’t get very far, partly because it is a tough problem and partly because there was more chatting than coding. As a concrete proposal, step one is to get PGP verification into Pip and solve issue #425. This probably means making python-gnupg a prerequisite for Pip (at least for PGP verification). Step two is to add certificate verification. Python 3 already supports certificate checking through OpenSSL; Python 2 might have to use something like the Requests library. Step three is to get a proper certificate on PyPI.
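As a sketch of what step two means in practice with recent Python 3 versions: ssl.create_default_context() loads the system CA certificates, requires a valid certificate chain, and checks that the certificate matches the hostname, and urllib.request.urlopen accepts that context. The helper names are hypothetical, and this only covers the transport; it does not replace signature checking.

```python
import ssl
import urllib.request


def make_verifying_context() -> ssl.SSLContext:
    """TLS context that rejects unverified certificates and mismatched hostnames."""
    # create_default_context() sets verify_mode=CERT_REQUIRED and check_hostname=True
    # and loads the platform's trusted CA certificates.
    return ssl.create_default_context()


def fetch_over_verified_https(url: str) -> bytes:
    """Download a URL, raising an error if the server's certificate does not check out."""
    with urllib.request.urlopen(url, context=make_verifying_context()) as resp:
        return resp.read()
```

Usage would look like fetch_over_verified_https("https://pypi.org/simple/rpc4django/"), where the index URL is an assumption; a man-in-the-middle presenting a self-signed or mismatched certificate makes the call raise instead of silently returning a tampered page.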

Edit: Updated command to upload signed package

Edit (January 2018): This five-year-old post is massively outdated. I recommend taking a look at the Python packaging and distributing docs, which are much better now. The commands I typically run to distribute a package are:

```
# Remove any old distributions
% rm -rf dist/

# Create new tar.gz and wheel files
# Only create a universal wheel if py2/py3 compatible and no C extensions
% python setup.py sdist bdist_wheel --universal

# Sign each distribution separately (creates one .asc per file)
% for f in dist/*; do gpg --detach-sign -a "$f"; done

# Upload to PyPI
% twine upload dist/*
```
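The sign-and-upload steps can also be scripted. This is a minimal sketch, assuming gpg and twine are on PATH and dist/ has just been rebuilt; the helper names are hypothetical. It runs one gpg --detach-sign -a per file so each distribution gets its own .asc, optionally passing gpg’s --local-user to pick the signing key, and hands twine both the distributions and their signatures.

```python
import subprocess
from pathlib import Path
from typing import List, Optional


def gpg_sign_args(path: str, identity: Optional[str] = None) -> List[str]:
    """Arguments for an ASCII-armored detached signature (gpg writes PATH.asc)."""
    args = ["gpg", "--detach-sign", "-a"]
    if identity:
        # --local-user selects which secret key signs, if you have several.
        args += ["--local-user", identity]
    return args + [path]


def release_commands(dist_files: List[str], identity: Optional[str] = None) -> List[List[str]]:
    """One gpg call per distribution, then a single twine upload of files plus signatures."""
    dists = sorted(f for f in dist_files if not f.endswith(".asc"))
    commands = [gpg_sign_args(f, identity) for f in dists]
    commands.append(["twine", "upload", *dists, *(f + ".asc" for f in dists)])
    return commands


def run_release(dist_dir: str = "dist", identity: Optional[str] = None) -> None:
    """Sign and upload everything in dist_dir, stopping at the first failing step."""
    files = [str(p) for p in Path(dist_dir).iterdir() if p.is_file()]
    for cmd in release_commands(files, identity):
        subprocess.run(cmd, check=True)
```

Building the command lists separately from running them makes it easy to print or review exactly what will be signed and uploaded before anything touches PyPI.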