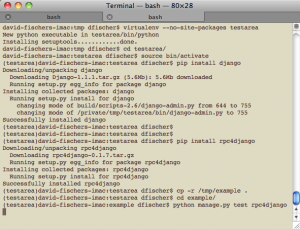

In a previous post, I promised to write about pip and virtualenv, and I’m now finally making good on that promise. Others have done this before, but I think I have a little to add. If you develop a Python module and you don’t test it with virtualenv, don’t make your next release until you do.

Configuring the environment

Virtualenv creates a Python environment that is segregated from your system-wide Python installation. In this way, you can test your module without any external packages mucking up the result, try it against different versions of its dependencies, and verify the exact set of requirements for your package.

To create the virtual environment:

```
% virtualenv --no-site-packages testarea
```

This creates a directory testarea/ that contains directories for installing modules and a Python executable. To use the virtual environment:

```
% cd testarea
% source bin/activate
```

Sourcing activate sets environment variables (notably PATH) so that the Python executable under testarea/ is the one that runs and only modules installed under testarea/ are used. After setting up the environment, any desired packages can be installed from PyPI:

```
(testarea) % pip install rpc4django
```

Pip can also uninstall packages, install a specific version, or install from the file system, a URL or directly from source control. The first two look like this:

```
(testarea) % pip uninstall rpc4django
(testarea) % pip install rpc4django==0.1.6
```

Pip is worth using over easy_install for its uninstall capabilities alone. I should also mention that pip is actively maintained, while setuptools (which provides easy_install) has seen very little development lately.

When you’re done with the virtual environment, simply deactivate it:

```
(testarea) % deactivate
```
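One way to confirm whether an environment is currently active, for example before and after running deactivate, is to ask the interpreter itself. The following is a minimal sketch rather than anything virtualenv provides: the function name in_virtual_environment is made up for illustration, and it assumes that older virtualenv releases set sys.real_prefix while newer releases (and the standard library venv module) keep the original installation in sys.base_prefix.

```python
import sys


def in_virtual_environment():
    """Return True when the running interpreter belongs to a virtual environment.

    Hypothetical helper for illustration; not part of virtualenv or pip.
    """
    # Assumption: older virtualenv releases set sys.real_prefix inside an environment.
    if hasattr(sys, "real_prefix"):
        return True
    # Assumption: newer virtualenv releases and the venv module leave
    # sys.base_prefix pointing at the original installation.
    return getattr(sys, "base_prefix", sys.prefix) != sys.prefix


# sys.prefix is the root directory the interpreter loads its modules from.
print(sys.prefix)
print(in_virtual_environment())
```

Run with the environment activated, sys.prefix should point somewhere under testarea/; after deactivate it should point back at the system installation.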

Do it for the tests

While the segregated environment that virtualenv provides is extremely well suited to getting the correct environment up and running, it is just as well suited to testing your application under a variety of different package configurations. With pip and virtualenv, testing your application under three different versions of Django is a snap and it doesn’t affect your system environment in the slightest.
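As a rough sketch of what such a test matrix could look like, the script below only builds the commands for one environment per Django version and prints them. Several things here are assumptions for illustration: the helper name matrix_commands, the envs/ directory, the particular version pins, and the bin/pip path (which matches a Unix layout). The --no-site-packages flag simply mirrors the command used earlier in this post and may need adjusting for your virtualenv version.

```python
import os

# Illustrative version pins; substitute the releases you actually support.
DJANGO_VERSIONS = ["1.0.4", "1.1.1", "1.2"]


def matrix_commands(package, versions, base_dir="envs"):
    """Return, per version, the commands that build one isolated environment.

    Hypothetical helper: it only constructs the commands and runs nothing.
    """
    plan = {}
    for version in versions:
        env_dir = os.path.join(base_dir, "%s-%s" % (package.lower(), version))
        # Assumes a Unix layout where the environment's pip lives in bin/.
        pip = os.path.join(env_dir, "bin", "pip")
        plan[version] = [
            ["virtualenv", "--no-site-packages", env_dir],
            [pip, "install", "%s==%s" % (package, version)],
        ]
    return plan


for version, commands in sorted(matrix_commands("Django", DJANGO_VERSIONS).items()):
    for command in commands:
        print(" ".join(command))
```

Each command list could then be handed to subprocess.check_call, followed by a run of your test suite using that environment’s own Python executable. Calling pip by its path inside the environment avoids having to activate each environment in turn.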

Dependencies made easy

My favorite feature of pip is the ability to create a requirements file based on the set of packages installed in your virtual environment (or your global site-packages). The file can be generated automatically using pip’s freeze command:

```
(testarea) % pip freeze > requirements.txt
(testarea) % more requirements.txt
Django==1.1.1
rpc4django==0.1.7
wsgiref==0.1.2
```

Wsgiref will always appear in the output of pip freeze. It is a standard library package that includes package metadata, so pip lists it alongside the packages you installed yourself. The requirements file is used as follows:

```
% pip install -r requirements.txt
```

The requirements file can be version controlled both to aid in installation and to capture the exact versions of your dependencies directly where they are used rather than after the fact in documentation that can easily become out of date. The requirements file can be used to rebuild a virtual environment or to deploy a virtual environment into the machine’s site-packages. Pip and virtualenv are exceptionally easy to use and there’s really no excuse for a Python packager not to use them.
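Because a frozen requirements file is plain text with one name==version pin per line, it is also easy to inspect from a script, for instance to compare what two environments have installed. Here is a minimal sketch under that assumption; parse_pins is a hypothetical helper, not part of pip, and it deliberately ignores anything that is not a simple == pin.

```python
def parse_pins(text):
    """Map package names to pinned versions for simple name==version lines.

    Hypothetical helper for illustration; pip's real requirements syntax
    is richer than what is handled here.
    """
    pins = {}
    for line in text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        if "==" not in line:
            continue  # skip blank lines and anything without a == pin
        name, version = line.split("==", 1)
        pins[name.strip()] = version.strip()
    return pins


# The frozen output shown earlier in this post.
frozen = """\
Django==1.1.1
rpc4django==0.1.7
wsgiref==0.1.2
"""

pins = parse_pins(frozen)
for name in sorted(pins):
    print(name, pins[name])
```

Comparing the dictionaries from two requirements files is then a matter of ordinary dict operations, which can be handy when checking that a rebuilt environment matches the one under version control.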

Note: I’m working on a fairly large application for work. When it is finished, I will release a post-mortem that will also function as an update to my post about packaging and distributing.